I don’t know how computing works at the fundamental level.

There, I said it. 🫢 🫣

And I work in AI!

My biggest strength, as a generalist, has always been my ability to learn quickly. My biggest weakness has always been impatience. I tend to jump into action because I want to learn things faster, and the idea of not knowing keeps me up at night.

So in this newsletter garnish, I want to write about the little journey I took to understand the differences between CPU and GPU computing, including how I used Google Colab for 30 minutes to see them for myself.

Understanding CPU vs GPU in AI: This difference is at the heart of computing

Admittedly, despite working in AI, I found the nuances of CPUs and GPUs, and how each one is applied, daunting. Demystifying these technologies felt harder than explaining quantum physics to a six-year-old (which I can do 😉).

However, my impatience and curiosity made me plunge in, and I spent two weeks watching over three hours of YouTube content. I looked into NVIDIA’s innovations (NVIDIA is the cornerstone of the GPU ecosystem) and the intricacies of Large Language Models (LLMs) and their computational demands. I wanted to know:

How the CPU works

How the GPU works

The differences between the two

The math involved in Gen AI algorithms

What compute actually is

I watched the NVIDIA CEO give a keynote on their AI chip breakthrough here:

NVIDIA is making massive breakthroughs in accelerated computing: using software to program GPUs in a customized way to get the fastest possible compute.

I also watched this video:

This video explains, mathematically, why deep learning (AI) runs so much better on GPUs than on CPUs. I highly recommend it.

Putting theory into practice with PyTorch and CUDA

To understand the tech stack involved here, we have to talk about PyTorch and CUDA.

From the bottom of the stack to the top: the GPU → CUDA → PyTorch

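CUDA (Compute Unified Device Architecture) is NVIDIA’s parallel computing platform and programming model. Parallel computing splits a job into many small pieces that run at the same time, which is much faster than serial computing (one piece after another) for workloads like matrix math. CUDA sits on top of the NVIDIA GPU.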

PyTorch is one of many open-source ML libraries. It lets data scientists work through CUDA to make efficient use of the GPU, without writing low-level GPU code themselves.
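To see why matrix math suits parallel hardware, here’s a minimal plain-Python sketch (my own illustration, not code from the notebook or from CUDA). Every output cell of a matrix product is an independent dot product, so the rows can be handed out to separate workers and still give the same answer as the serial version. The function names are hypothetical, chosen just for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(u, v):
    """Dot product of two equal-length vectors: one output cell of a matrix product."""
    return sum(x * y for x, y in zip(u, v))


def matmul_serial(a, b):
    """Compute every output cell one after another (serial)."""
    cols = list(zip(*b))  # the columns of b
    return [[dot(row, col) for col in cols] for row in a]


def matmul_parallel(a, b, workers=4):
    """Hand each output row to a separate worker thread.

    Rows don't depend on each other, so they can be computed in any order.
    In standard CPython, threads won't actually speed up this pure-Python math
    (the global interpreter lock runs one thread's bytecode at a time); the point
    is only that the work splits cleanly, which is what GPUs exploit at scale.
    """
    cols = list(zip(*b))

    def row_result(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(row_result, a))  # map() keeps results in input order


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_serial(a, b))    # [[19, 22], [43, 50]]
print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

A GPU takes the same idea much further: instead of a handful of workers, thousands of cores each compute their own slice of the output.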

I used a Google Colab notebook to leverage CUDA and PyTorch to witness the speed of a GPU compared to a CPU.

Here is my Colab Notebook with the scripts that you can also copy and play with:

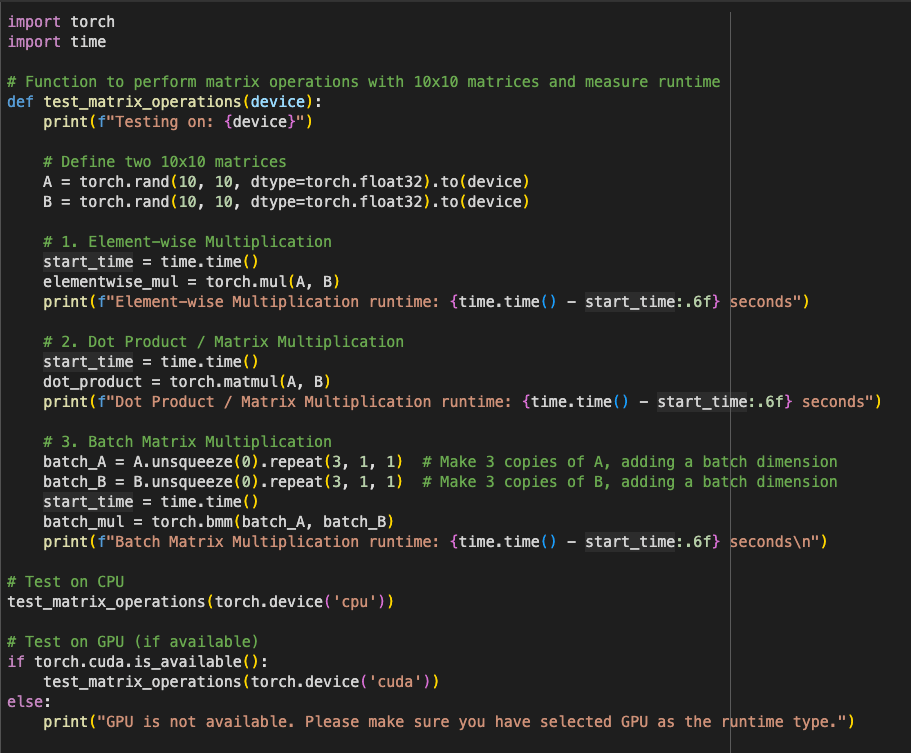

I went into ChatGPT and asked it to write me a PyTorch script to test various matrix multiplications on a CPU runtime vs a GPU runtime. In seconds, it gave me this:

Testing Parameters:

There were 4 program runs to test different matrix multiplication sizes:

10,000 × 10,000

1,000 × 1,000

100 × 100

10 × 10

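There were 3 kinds of multiplication methods:

Element-wise (two matrices of the same size, multiplied cell by cell)

Dot product (2D matrix multiplication, which also works on matrices of different sizes as long as the inner dimensions match)

Batch (many matrix multiplications at once, in parallel)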
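Here’s a rough plain-Python sketch of what each of the three methods computes, using tiny 2 × 2 matrices. In PyTorch, these map roughly to `a * b` (element-wise), `torch.mm(a, b)` or `a @ b` (2D matrix product), and `torch.bmm(batch_a, batch_b)` (batch). That mapping is my assumption about what the generated script used, and the helper names below are hypothetical.

```python
def elementwise(a, b):
    """Element-wise product: multiply matching cells. Both matrices must be the same shape."""
    return [[x * y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def matmul(a, b):
    """2D matrix product: cell (i, j) is the dot product of row i of a and column j of b."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    cols = list(zip(*b))  # the columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def batch_matmul(batch_a, batch_b):
    """Batch product: one independent 2D matrix product per pair in the batch."""
    return [matmul(a, b) for a, b in zip(batch_a, batch_b)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(elementwise(a, b))             # [[5, 12], [21, 32]]
print(matmul(a, b))                  # [[19, 22], [43, 50]]
print(batch_matmul([a, b], [b, a]))  # [[[19, 22], [43, 50]], [[23, 34], [31, 46]]]
```

Notice that the pairs in a batch never touch each other, which is why batch multiplication is such a natural fit for a GPU.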

Two outputs per method:

For each of the 4 matrix sizes and each of the 3 methods, the script reported both the CPU compute time and the GPU compute time.
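A benchmark like this is only as good as its timing method. Below is a minimal, hypothetical timing helper using only Python’s standard library: it runs warm-up calls first (so one-time setup isn’t counted as compute), then reports the median of several runs. One caveat if you adapt it for PyTorch on a GPU: CUDA work runs asynchronously, so you’d need to call `torch.cuda.synchronize()` before reading the clock, or the timer may stop before the GPU has finished.

```python
import statistics
import time


def time_op(fn, *args, repeats=5, warmup=1):
    """Return the median wall-clock time of fn(*args), in microseconds.

    Warm-up calls run first and are discarded, so one-time setup costs
    (lazy initialization, caches) aren't counted as compute time.
    """
    for _ in range(warmup):
        fn(*args)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1e6)
    return statistics.median(samples)


def matmul(a, b):
    """Plain-Python matrix product, used here only as something to time."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


for n in (10, 50):
    m = [[1.0] * n for _ in range(n)]
    print(f"{n} x {n}: {time_op(matmul, m, m):.0f} µs")
```

Using the median rather than the mean keeps one slow outlier run from skewing the result.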

The Results

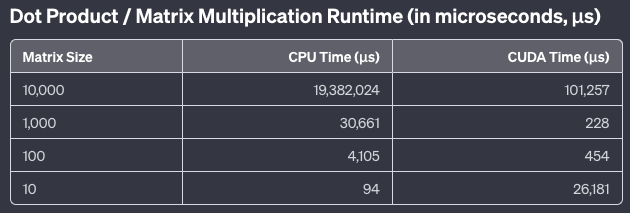

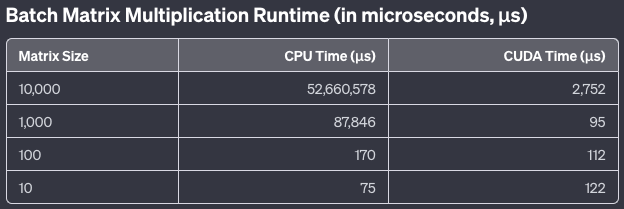

For simplicity, I left element-wise multiplication out of the results, since the dot product is enough to show the differences.

Left: Batch multiplication, Right: Dot product multiplication

For small matrix sizes (left side of each chart), the compute time (the y-axis) is lower; for larger sizes, it is higher. This is intuitive: bigger matrices mean more multiplications.
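To put a number on “intuitive”: for two n × n matrices, each of the n² output cells is a dot product of length n, so the work grows as n³. A quick sketch of that arithmetic (it counts multiply-add operations and ignores memory traffic):

```python
def matmul_ops(n):
    """Multiply-add operations for an (n x n) @ (n x n) matrix product.

    Each of the n * n output cells is a dot product of length n,
    so the total is n * n * n = n**3 multiply-adds.
    """
    return n ** 3


for n in (10, 100, 1_000, 10_000):
    print(f"{n:>6,} x {n:<6,}: {matmul_ops(n):>20,} multiply-adds")
```

So going from 10 × 10 to 10,000 × 10,000 multiplies the work by a factor of a billion (10⁹), not a thousand.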

But let’s look at the difference between the CPU and the GPU (CUDA).

At small matrix sizes (below 100 × 100), the GPU is slower than the CPU for batch multiplication and massively slower for the dot product. Looking at the chart on the right, the GPU takes 26,000 microseconds to multiply simple 10 × 10 matrices, compared to 94 microseconds on the CPU. For tiny matrices, the fixed overhead of handing work to the GPU outweighs the math itself. This is why everyday computers do just fine with only a CPU!

At larger matrix sizes, the CPU is very slow for both dot product and batch multiplication. The CPU took 52,000,000 microseconds (52 seconds) to multiply 10,000 × 10,000 matrices with batch multiplication, and 19,000,000 microseconds (19 seconds) with the dot product.

The GPU in comparison only took 200 milliseconds!!

Here are tables to visualize:

My learning about computing from this

For scalar operations and small matrices: the CPU crushes the GPU. Its architecture is optimized for sequential tasks, which makes it efficient on smaller datasets where the overhead of GPU parallelization isn’t worth paying.

For large matrices and batch operations: the GPU crushes the CPU, because its parallel processing power delivers staggering reductions in compute time. The advantage is most pronounced for batch matrix multiplication, where the GPU’s ability to execute many operations simultaneously made it roughly 7,000 times faster than the CPU, close to 4 orders of magnitude!

Why does this matter?

In an age of computing limits, generative AI, and data science, the math behind neural networks is mostly massive multiplications of multidimensional arrays (tensors). Gen AI algorithms could help solve some of the world’s most challenging and complex problems, such as medical and genomic innovation, where DNA mapping involves millions of input (math) variables. The same applies to self-driving cars, climate science, weather prediction, physics, and much more.
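Here’s what “neural network math is matrix multiplication” looks like in practice: the forward pass of a single fully connected (dense) layer over a batch of inputs is a matrix product plus a bias. This is a simplified plain-Python sketch with made-up numbers; real layers also apply an activation function, and frameworks like PyTorch run the same operation on tensors with thousands of rows and columns.

```python
def dense_layer(x_batch, weights, bias):
    """Forward pass of one fully connected layer: y = x @ W + b (activation omitted).

    x_batch: batch_size x in_features (one input vector per row)
    weights: in_features x out_features
    bias:    out_features values
    """
    cols = list(zip(*weights))  # one column of weights per output neuron
    return [
        [sum(x * w for x, w in zip(row, col)) + b for col, b in zip(cols, bias)]
        for row in x_batch
    ]


x = [[1, 2], [3, -1]]      # batch of 2 inputs, 2 features each
W = [[1, 0, 2], [0, 1, 1]]  # 2 features in -> 3 outputs
b = [0, 0, 1]
print(dense_layer(x, W, b))  # [[1, 2, 5], [3, -1, 6]]
```

Stack dozens of these layers, each with thousands of inputs and outputs, and you get exactly the kind of huge matrix multiplications the GPU won in my test.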

Fun fact: I tried running a 100,000 × 100,000 matrix, and the CPU run failed because it ran out of available RAM.
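Some quick arithmetic explains the crash. This sketch assumes float32 elements (4 bytes each), which is PyTorch’s default floating-point dtype; I haven’t confirmed which dtype the notebook’s script actually used.

```python
def matrix_gib(n, bytes_per_element=4):
    """Memory for one n x n matrix, in GiB (4 bytes per element = float32)."""
    return n * n * bytes_per_element / 2**30


for n in (10_000, 100_000):
    print(f"{n:,} x {n:,}: {matrix_gib(n):.1f} GiB per matrix")
```

By that estimate, a single 100,000 × 100,000 matrix needs about 37 GiB, and a multiplication needs at least three of them (two inputs and one output).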

Accelerated learning through AI

In about 30 minutes, I was able to spin up a Colab notebook, open a ChatGPT chat, and run a few experiments that taught me the power of computing by comparing the CPU with the GPU.

AI taught me about AI.

Fast forward 5 years: multimodal models and LLMs will bring the marginal time and cost of learning down to 0. There is so much value in being able to educate mass populations on complex topics. Democratizing information will lead to more rapid innovation.

As the growth of CPU computing started to plateau, our ability to learn faster was projected to slow down. Compute is the most important invention we have for accelerating innovation, so AI has been held back by the limits of CPU computing.

But now we have GPU innovation that will unblock AI innovation.

AI is no longer a hardware problem. It will only be a software limitation.

Okay. Now I know how computing works 😉.

Please comment or reach out if I missed something or have more to learn!

Resources from this newsletter:

My Colab notebook, which you can run yourself

Thanks for being a reader!


See you next time! 👋